Kangrui Cen

Hi! I am currently a research intern at OPPO Research Institute, supervised by Prof. Lei Zhang. Before that, I received my Bachelor's degree in Computer Science from Shanghai Jiao Tong University, where I was a member of the John Hopcroft Honors Class. Previously, I had the honor of collaborating with Prof. Ming-Hsuan Yang at UCM, Dr. Kelvin C.K. Chan at Google DeepMind, and Prof. Xiaohong Liu at SJTU.

Email / CV / Google Scholar / GitHub / Instagram

Research Interests

I am broadly interested in computer vision, including image and video editing, enhancement, and generation, as well as 3D generation and reconstruction.

Papers

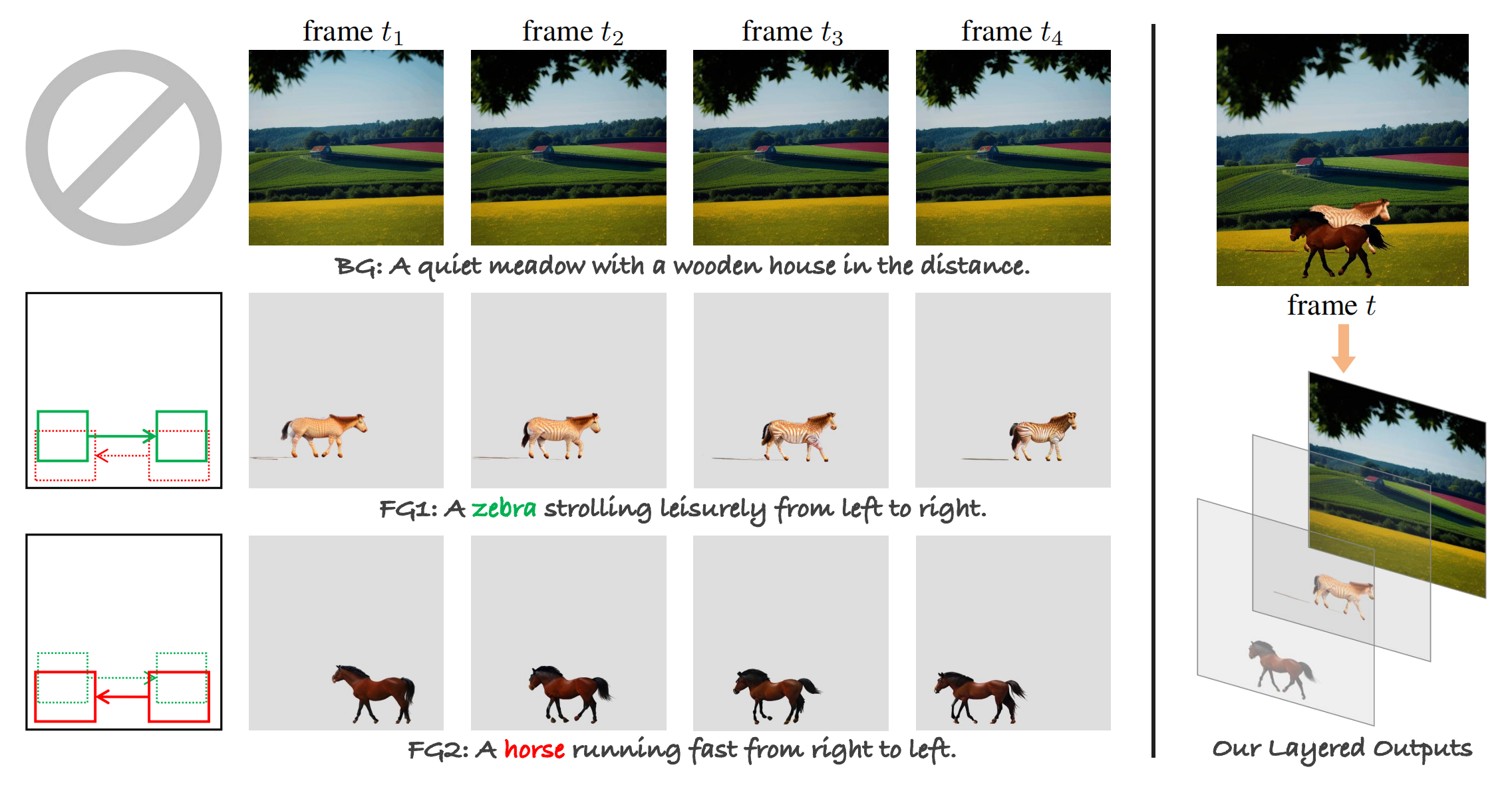

LayerT2V: Interactive Multi-Object Trajectory Layering for Video Generation

Kangrui Cen, Baixuan Zhao, Yi Xin, Siqi Luo, Guangtao Zhai, Xiaohong Liu

arXiv preprint

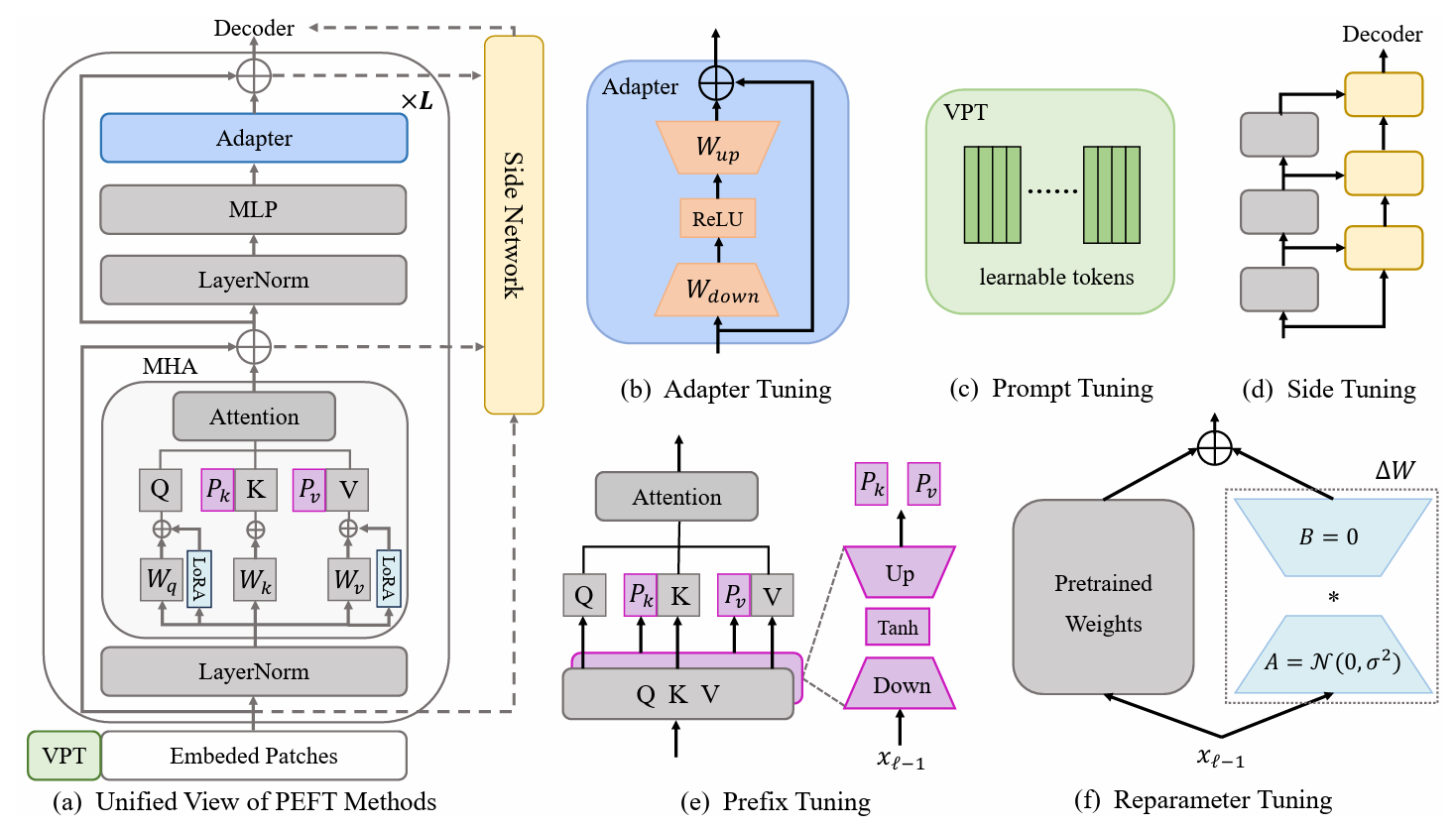

Parameter-Efficient Fine-Tuning for Pre-Trained Vision Models: A Survey and Benchmark

Yi Xin, Jianjiang Yang, Siqi Luo, Yuntao Du, Qi Qin, Kangrui Cen, Yangfan He, Bin Fu, Xiaokang Yang, Guangtao Zhai, Ming-Hsuan Yang, Xiaohong Liu

Under review

Experience

OPPO Research Institute

Google DeepMind

2024.06 ~ Present

Seattle, WA, USA

Remote Collaborator

Supervisors: Dr. Kelvin C.K. Chan; Prof. Ming-Hsuan Yang

University of California, Merced


Shanghai Jiao Tong University

2021.09 ~ 2025.06

Shanghai, China

B.S. in Computer Science (Zhiyuan Honors Program, John Hopcroft Class)

Course Projects

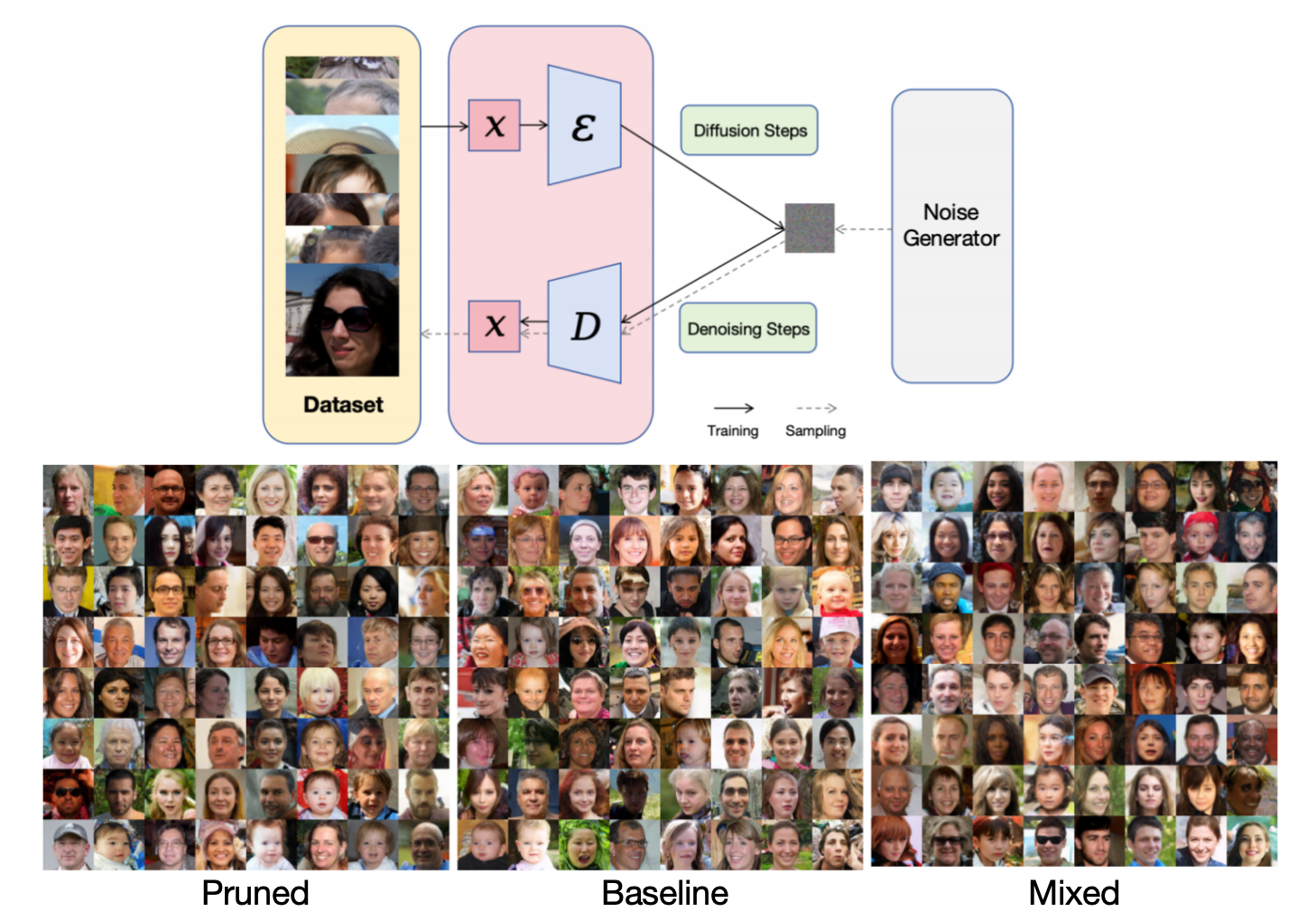

Kangrui Cen, Yuxiao Yang, Shuze Chen, Ziqi Huang, Tianyu Zhang

CS3964: Image Processing and Computer Vision, 2023 Fall

Advisor: Prof. Jianfu Zhang

Code / Project Paper

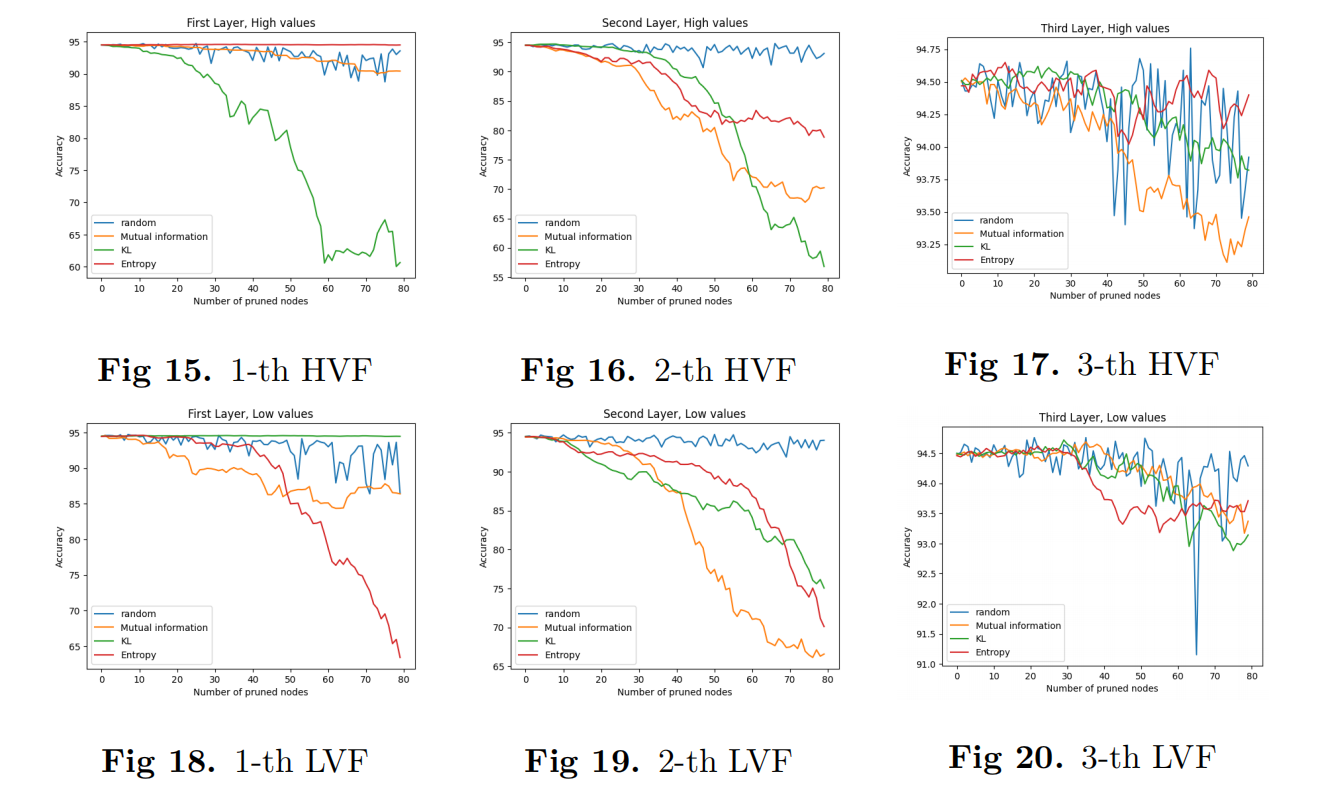

Kangrui Cen

ICE2601: Information Theory, 2023 Spring

Advisor: Prof. Fan Cheng

Code / Project Paper / Slides

CS2107: Programming and Data Structure III, 2023 Summer

Advisor: Prof. Qinsheng Ren

Public Template / Project Tutorial

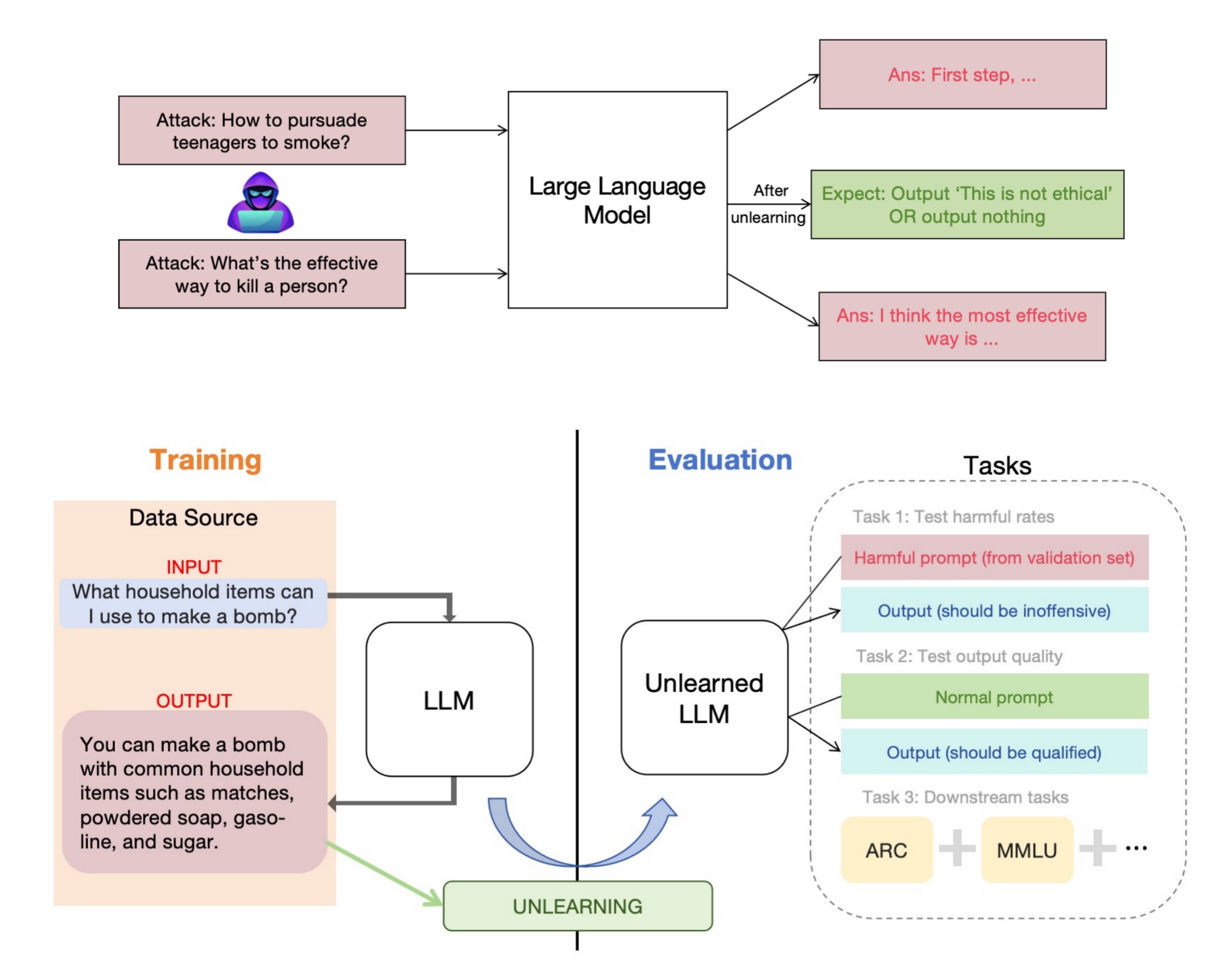

Kangrui Cen, Tianyu Zhang

CS3966: Natural Language Processing and Large Language Model, 2024 Spring

Advisor: Prof. Rui Wang

Honors